Project 5

Fun With Diffusion Models! - Project Spec

- Project 5A: The Power of Diffusion Models!

- Project 5B: Diffusion Models from Scratch!

Project 5A: The Power of Diffusion Models!

Part 0: Setup

In the first part of the project, I played around with an already trained stable diffusion model, DeepFloyd IF.

To try out the diffusion model, I tried the following 3 prompts with the following num_inference_steps values. A higher number of steps resulted in higher quality output. I used a random seed of 180.

20 steps:

| A man wearing a hat | A rocket ship | An oil painting of a snowy mountain village |

|---|---|---|

|

|

|

100 steps:

| A man wearing a hat | A rocket ship | An oil painting of a snowy mountain village |

|---|---|---|

|

|

|

Part 1: Sampling Loops

1.1 Implementing the Forward Process

First, I implemented a function forward which takes in an original image im and time step t. A higher t means more noise was added. Mathematically, this is represented as follows:

where:

- \(x_t\) is the image at time step \(t\) (\(x_0\) is the original image)

- \(\bar{\alpha_t}\) is a noise coefficient chosen by the people who trained DeepFloyd

- \(\epsilon \sim \mathcal{N}(0, 1)\) is Gaussian noise

| Original | t = 250 |

t = 500 |

t = 750 |

|---|---|---|---|

|

|

|

|

1.2 Classical Denoising

Since stable diffusion is essentially trying to denoise an image step by step, first, I tried a classical denoising technique to attempt to recover the original image: Gaussian blur. As expected, the denoised output does not work very well. We get rid of the noise, but we also get rid of the “signal” (e.g. the actual original image).

| t | Noisy | Denoised |

|---|---|---|

| 250 |  |

|

| 500 |  |

|

| 750 |  |

|

1.3 One-Step Denoising

Next I used a pretrained diffusion model (a UNet trained on a large dataset of pairs of \(x_0\) and \(x_t\) images) to denoise the noisy images. The UNet uses estimates of the noise at a particular timestep to denoise the image. The output is noticeably better than using Gaussian blur, but at higher t values (such as t = 750) the denoised output tower starts to look quite different from the original Campanile tower.

For reference, here is the original image again:

| t | Noisy | Denoised |

|---|---|---|

| 250 |  |

|

| 500 |  |

|

| 750 |  |

|

1.4 Iterative Denoising

To solve the issue mentioned in 1.3, we can use iterative denoising with a stride of 30 time steps, starting at t = 990 to t = 0. I implemented the function iterative_denoise which uses the following formula:

where:

- \(x_t\) is the same as in 1.1

- \(x_{t'}\) is the noisy image at time step \(t'\) where \(t' < t\) (e.g. a slightly less noisy image)

- \(\bar{\alpha_t}\) is the same as in 1.1

- \(\alpha_t\) = \(\frac{\bar{\alpha_t}}{\bar{\alpha_{t'}}}\)

- \(\beta_t\) = \(1 - \alpha_t\)

- \(x_0\) is the same as in 1.1

- \(v_\sigma\) is random noise

See also: Denoising Diffusion Probabilistic Models by Jonathan Ho, Ajay Jain, and Pieter Abbeel.

The following shows the results of iterative denoising for every 5 loops:

t = 690 |

t = 540 |

t = 390 |

t = 240 |

t = 90 |

|---|---|---|---|---|

|

|

|

|

|

Here are the other outputs for reference:

| Original | Gaussian Blur Denoised | One-Step Denoised | Iterative Denoised |

|---|---|---|---|

|

|

|

|

We can observe that the iteratively denoised image produces the best result.

1.5 Diffusion Model Sampling

Stable diffusion works by converting noise to completely denoised images. Thus, I can use my iterative_denoise function from 1.4 to generate images from completely noisy images (e.g. an image with pixels from a Gaussian distribution). Here are 5 generated images:

| Sample 1 | Sample 2 | Sample 3 | Sample 4 | Sample 5 |

|---|---|---|---|---|

|

|

|

|

|

1.6 Classifier-Free Guidance (CFG)

Some of the images in 1.5 are non-sensical, so to improve image output quality (at the expense of image diversity), I used a technique called Classifier-Free Diffusion Guidance developed by Jonathan Ho and Tim Salimans.

In CFG, we compute a conditional and unconditional noise estimate \(\epsilon_c\) and \(\epsilon_u\), respectively. Then we let our new noise estimate be:

\[\epsilon = \epsilon_u + \gamma (\epsilon_c - \epsilon_u)\]where \(\gamma\) controls the strength of the CFG. When \(\gamma \gt 1\), we get higher quality images, such as the 5 random samples below:

| Sample 1 | Sample 2 | Sample 3 | Sample 4 | Sample 5 |

|---|---|---|---|---|

|

|

|

|

|

1.7 Image-to-image Translation

Below are the results of taking 3 original images, adding varying levels of noise, and then iteratively denoising the noisy images with CFG. i_start denotes where we begin the iterative denoising process (e.g. lower value means we denoised with a few iterations and higher value means we denoised with a lot of iterations).

In addition to the given test image of the Campanile, I chose an image of the Golden Gate Bridge and the Eiffel Tower that were resized to \(64 \times 64\).

i_start = 1 |

i_start = 3 |

i_start = 5 |

i_start = 7 |

i_start = 10 |

i_start = 20 |

Original |

|---|---|---|---|---|---|---|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

See also: SDEdit algorithm by Meng et al. (2022).

1.7.1 Editing Hand-Drawn and Web Images

In this subpart, I drew 2 images and found 1 image from the web and utilized the diffusion model to edit the images using the same method as above.

i_start = 1 |

i_start = 3 |

i_start = 5 |

i_start = 7 |

i_start = 10 |

i_start = 20 |

Original |

|---|---|---|---|---|---|---|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

1.7.2 Inpainting

Next I implemented the inpainting part of this paper, RePaint: Inpainting using Denoising Diffusion Probabilistic Models by Andreas Lugmayr, Martin Danelljan, Andres Romero, Fisher Yu, Radu Timofte, and Luc Van Gool. (Using this paper, Scene Completion Using Millions of Photographs by James Hays and Alexei Efros, for inspiration on how to “complete” the scene.)

Given an image, \(x_{orig}\), and binary mask \(m\), I created a new image with the same content as the original where \(m = 0\), but new content where \(m = 1\). To do this, we run the diffusion denoising loop and only keep the part where the mask is 1:

\[x_t \leftarrow m x_t + (1 - m) \text{forward}(x_{orig}, t)\]| Campanile with new top | Cat with dog head | Oski with dog head |

|---|---|---|

|

|

|

1.7.3 Text-Conditional Image-to-image Translation

In this part, I changed the text prompt from "a high quality image" to "a rocket ship", obtaining the results below with different levels of noise for the Campanile image and some images from the web.

i_start = 1 |

i_start = 3 |

i_start = 5 |

i_start = 7 |

i_start = 10 |

i_start = 20 |

Original |

|---|---|---|---|---|---|---|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

1.8 Visual Anagrams

In this part, I implemented Visual Anagrams from this paper, Visual Anagrams: Generating Multi-View Optical Illusions with Diffusion Models by Daniel Geng, Inbum Park, and Andrew Owens. To do this, we denoise like before but we alternate between 2 different text prompts and flip the image upside-down. That way, the final image right-side up looks like the first prompt, and upside-down looks like the second prompt.

The full algorithm is as follows:

\[\epsilon_1 = \text{UNet}(x_t, t, p_1)\] \[\epsilon_2 = \text{flip}(\text{UNet}(\text{flip}(x_t), t, p_2))\] \[\epsilon = (\epsilon_1 + \epsilon_2) / 2\]where flip is a function that flips the image and p_i are the 2 text prompts. Our final noise estimate is \(\epsilon\), which is the average of the 2 individual noise estimates.

| Prompt 1 | Image | Prompt 2 | Flipped Image |

|---|---|---|---|

| an oil painting of an old man |  |

an oil painting of people around a campfire |  |

| an oil painting of a snowy mountain village |  |

a photo of the amalfi cost* |  |

| a lithograph of waterfalls |  |

a lithograph of a skull |  |

*This should say “coast” but that’s how the text embeddings were loaded in the starter code provided by staff :)

1.9 Hybrid Images

In this part, I implemented this paper, Factorized Diffusion: Perceptual Illusions by Noise Decomposition by Daniel Geng, Inbum Park, and Andrew Owens. Specifically, I used the diffusion model to create hybrid images like in Project 2.

Similar to the visual anagrams, we just have to adjust our noise estimate as follows:

\[\epsilon_1 = \text{UNet}(x_t, t, p_1)\] \[\epsilon_2 = \text{UNet}(x_t, t, p_2)\] \[\epsilon = f_{\text{lowpass}}(\epsilon_1) + f_{\text{highpass}}(\epsilon_2)\]where \(f_{\text{lowpass}}\) is a low pass filter and \(f_{\text{highpass}}\) is a high pass filter. I used the suggested Gaussian blur of kernel size 33 and \(\sigma = 2\).

| Prompt 1 | Prompt 2 | Hybrid Image |

|---|---|---|

| a lithograph of waterfalls | a lithograph of a skull |  |

| an oil painting of people around a campfire | a lithograph of a skull |  |

| a man wearing a hat | a lithograph of waterfalls |  |

Project 5B: Diffusion Models from Scratch!

In this part of the project, I wrote a stable diffusion model from scratch trained on the MNIST dataset.

Part 1: Training a Single-Step Denoising UNet

1.1: Implementing the UNet

Given a noisy image \(z\), I trained a denoiser \(D_\theta\) that maps \(z\) to a clean image \(x\) by optimizing L2 loss:

\[L = \mathbb{E}_{z, x}||D_\theta(z) - x ||^2\]I did this by implementing a UNet, from this paper: U-Net: Convolutional Networks for Biomedical Image Segmentation by Olaf Ronneberger, Philipp Fischer, and Thomas Brox.

1.2 Using the UNet to Train a Denoiser

To begin, I visualized how an image can become more and more noisy by implementing a function add_noise which takes a clean image x and adds Gaussian noise:

where \(\sigma = [0.0, 0.2, 0.4, 0.6, 0.8, 1.0]\), \(\epsilon \sim \mathcal{N}(0, I)\), and normalized \(x \in [0, 1)\).

1.2.1: Training

Next, I began training my unconditioned UNet to be able to denoise an image in 1 step by training on pairs of clean and noisy images where \(\sigma = 0.5\). I used the following hyperparameters, as suggested on the spec:

- Batch size: 256

- Epochs: 5

- Hidden dimension

D: 128 - Optimizer: Adam

- Learning rate:

1e-4

Here is my training loss graph:

Here are the results of the denoiser at epoch 1 and epoch 5:

1.2.2: Out-of-Distribution Testing

To see if the denoiser can generalize to other levels of noise, I kept the image the same and varied \(\sigma\). The denoised images can be seen below, and are not bad but also not great (this is to be fixed later in the project).

Part 2: Training a DDPM Denoising U-Net

In this part, I implemented DDPM from this paper, Denoising Diffusion Probabilistic Models by Jonathan Ho, Ajay Jain, and Pieter Abbeel.

Instead of estimating the denoised image, we can train a UNet to estimate the noise that was added to the image. This changes the loss function we’re trying to minimize accordingly:

\[L = \mathbb{E}_{z, x}||\epsilon_\theta(z) - \epsilon||^2\]where \(\epsilon_\theta\) is a UNet trained to predict noise. Additionally, to produce better results we do iterative denoising instead of one-step denoising. Like in Part A of the project, we use the following formula to compute iteratively noisier images in the forward pass:

\[x_t = \sqrt{\bar{\alpha_t}} x_0 + \sqrt{1 - \bar{\alpha_t}} \epsilon\]where:

- \(x_t\) is the image at time step \(t\) (\(x_0\) is the original image)

- \(\bar{\alpha_t}\) is a noise coefficient computed accordingly:

- \(\beta_t\) is a list of numbers of length 300 (

t = 299tot = 0) such that \(\beta_0 = 0.0001\) and \(\beta_T = 0.02\) and all other elements are evenly spaced between the two - \[\alpha_t = 1 - \beta_t\]

- \[\bar{\alpha_t} = \Pi_{s = 1}^{t} \alpha_s\]

- \(\beta_t\) is a list of numbers of length 300 (

- \(\epsilon \sim \mathcal{N}(0, 1)\) is Gaussian noise

2.1: Adding Time Conditioning to UNet

We can add time conditioning to the UNet by modifying the loss function again:

\[L = \mathbb{E}_{z, x}||\epsilon_\theta(x_t, t) - \epsilon||^2\]and adding an FCBlock to the unconditioned UNet made up of some nn.Linear and nn.GELU elements (see the project spec for more details).

2.2: Training the UNet

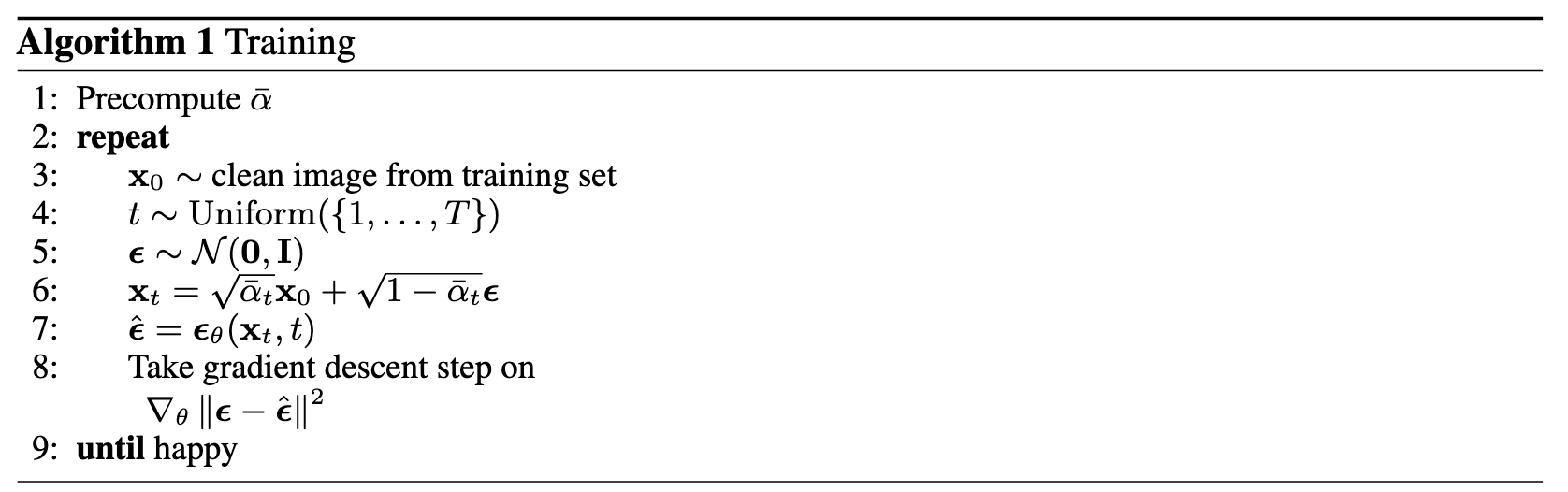

I implemented this algorithm:

to perform the forward pass of the UNet. I trained the network with the following hyperparameters, as suggested:

- Batch size: 128

- Epochs: 20

- Hidden dimension

D: 64 - Optimizer: Adam

- Learning rate:

1e-3 - Learning rate decay scheduler: ExponentialLR with

gamma = 0.1 ** (1.0 / num_epochs)

Below is my training loss curve:

2.3: Sampling from the UNet

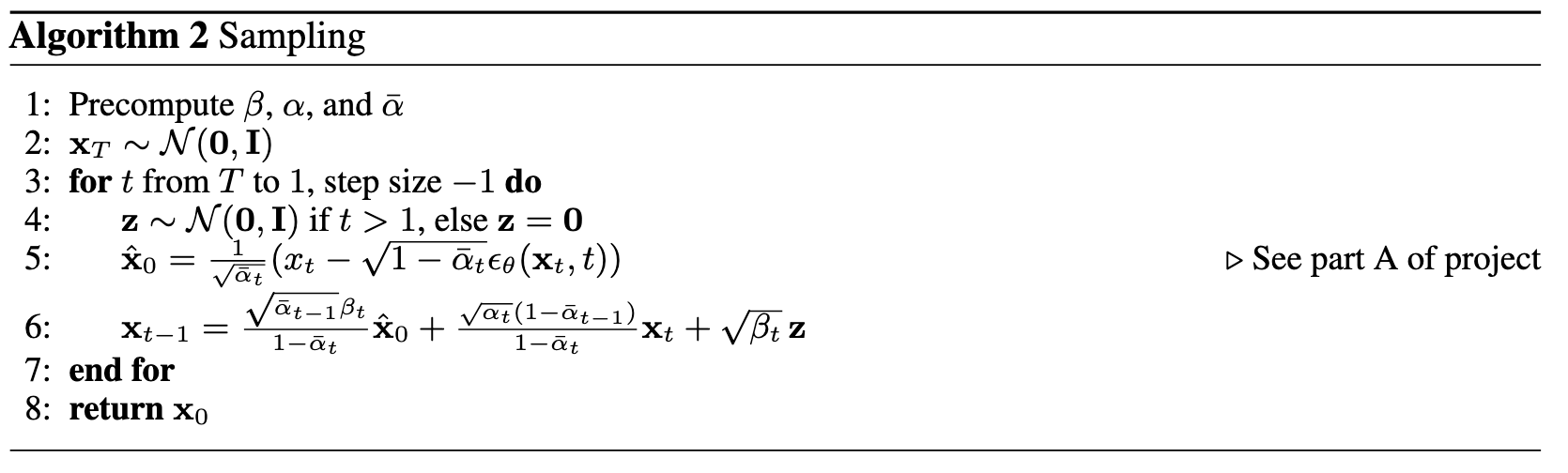

I then implemented this algorithm:

to generate/sample 10 digits from the UNet at epoch = 5:

and at epoch 20:

One thing I had to be very careful about was setting the seed to generate the starting \(x_t\) (I used the current epoch index) and setting the seed to generate \(z\) (I used the current \(t\) value).

2.4: Adding Class-Conditioning to UNet

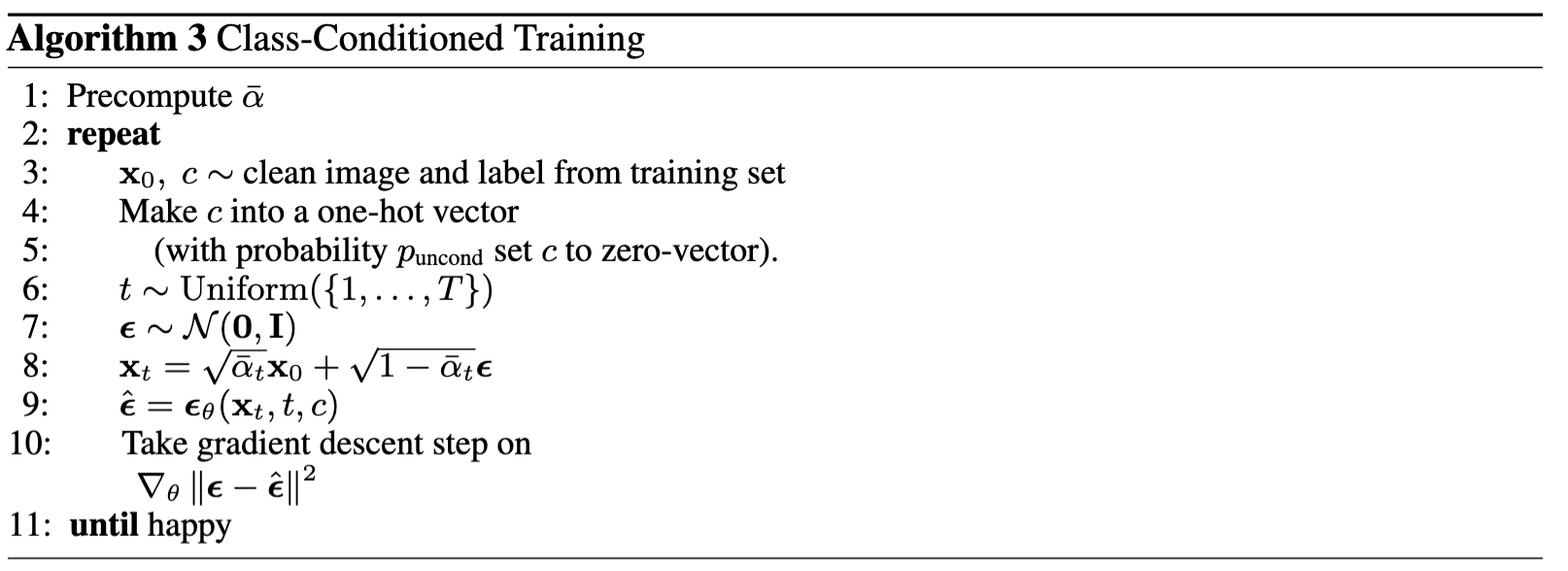

To be able to generate a particular digit (rather than any digit), I implemented class conditioning by adding 2 more FCBlocks in a similar way as before (see the spec for details). Additionally, I dropped out (e.g. turned that image’s one-hot-encoded vector to all zeros in the batch) the class label for 10% of images in the batch. I also used Classifier-Free Guidance to estimate noise from both the unconditioned and conditioned UNet.

I implemented this algorithm:

And had this training loss curve with the same hyperparameters as before:

2.5: Sampling from the Class-Conditioned UNet

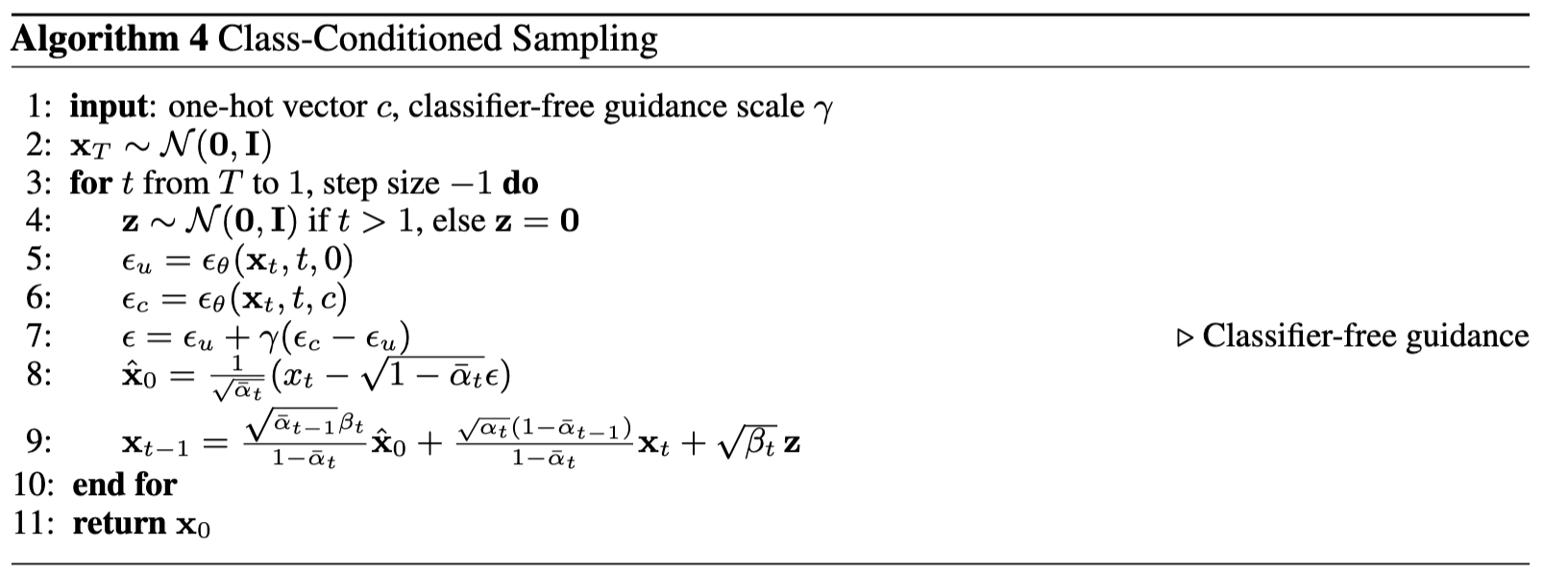

I then implemented this algorithm:

to generate/sample digits 0-9 in that order at epoch 5:

and at epoch 20:

Once again, I had to be very careful about was setting the seed to generate the starting \(x_t\) (I used epoch + j where epoch is the epoch index and j is an index from [0, 3] since we have to sample digits 0-9 at least 4 times) and setting the seed to generate \(z\) (I used the current \(t\) value).

Part 3: Bells & Whistles

Sampling Gifs

I created gifs of the sampling process from t = 299 to t = 0 for the time conditioned UNet at epoch 5 (first row) and epoch 20 (second row):

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

I did the same for the class conditioned UNet:

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|